Over the last decades, it has become clear that the microbiome is crucial for human health, disease, wellbeing, and for developing new treatments. Metagenomics and next-generation sequencing has progressed in leaps, resulting in the collection of extensive datasets. To maximize their potential, advanced bioinformatics tools and a well-defined workflow could make all the difference.

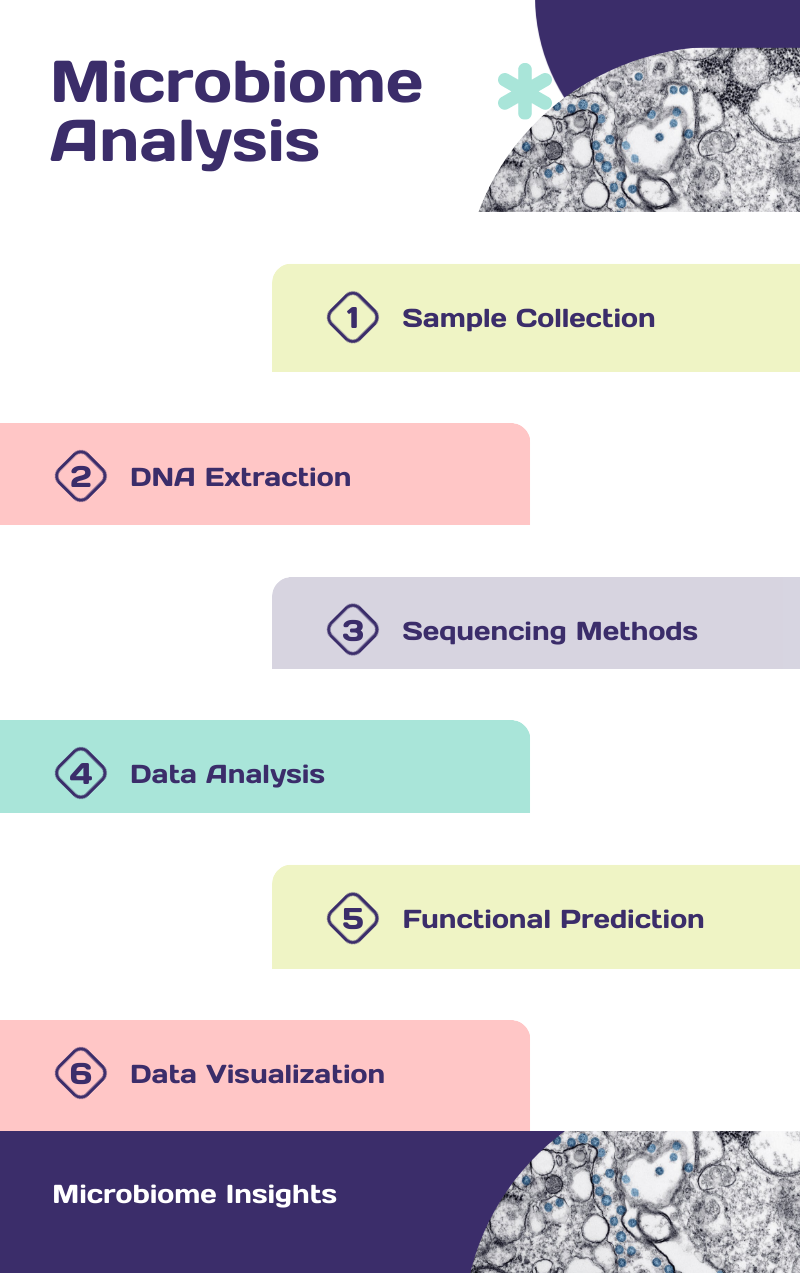

A standard workflow starts with sample preparation. Samples may include feces, saliva, skin swabs, and biopsies, or soil and water samples. Erroneous sample handling, transport, or storage could lead to contamination or degradation. It is also critical to select an appropriate sample size for clinical applications. e.g, skin samples have significantly less microbial biomass than gut samples, making it vital to collect enough samples to ensure reliable sequencing results. Microbial DNA must then be extracted very carefully using mechanical or chemical disruption, and the method should capture all the different types of microbes in the sample.

The extracted DNA must be sequenced. What used to be done with Sanger sequencing has been transformed through next generation sequencing (NGS), which can sequence millions of DNA fragments at once. This is a crucial step, and there are several approaches to sequencing that are in use, each with its own advantages.

Targeted sequencing methods use specific genetic markers (amplicons) to identify each type of microbe, such as 16S ribosomal RNA gene sequences for bacteria and internal transcribed spacer (ITS) region sequences for fungi. This is done by amplifying the target genes with barcode primers, purifying the samples, and preparing DNA libraries before sequencing. Illumina sequencing, known for its ability to generate large amounts of data quickly and cost-effectively, is well-suited for targeted marker-based studies of microbial community structure without the need for whole-genome sequencing.

Targeted sequencing has been effective for comparing microbial composition across health versus disease, but it focuses only on limited portions of microbial genomes. In contrast, shotgun metagenomics employs untargeted NGS sequencing to capture the complete range of microbial genomes present in a sample - bacteria, fungi, DNA viruses, and other microorganisms.

Even with NGS, short-read NGS techniques have limited utility for analyzing polyploid genomes. Technologies such as Pacific Biosciences (PacBio) and Oxford Nanopore offer long-read sequencing capabilities, which provide complete sequences of microbial genomes. These methods can resolve complex regions of the genome that short-read methods may miss, making them suitable for metagenomic studies.

After sequencing, bioinformatics tools such as MetaVelvet, MEGAHIT and MetaCompass are used to process raw data, remove low-quality reads, and assemble sequences into larger contigs. Shotgun metagenomic data can be assembled either without a reference (de novo), based on existing genomes, or through a combination of both methods.

Metatranscriptomics, like shotgun metagenomics, captures RNA from microbial cells and provides insights into gene expression. Similarly, metaproteomics - which targets the proteins produced by the microbiome in the sample, and metabolomics, are essential methods for studying microbial communities, linking a microbiome’s genetic potential to actual metabolic activity, products, and environmental interaction. Together, these approaches enhance our understanding of how microbial communities influence host metabolism and health, making them vital for research in nutrition, medicine, and related fields.

The rich data thus obtained now has to be analyzed and interpreted. The sheer amount of data produced by microbiome studies demands powerful bioinformatics tools for proper analysis.

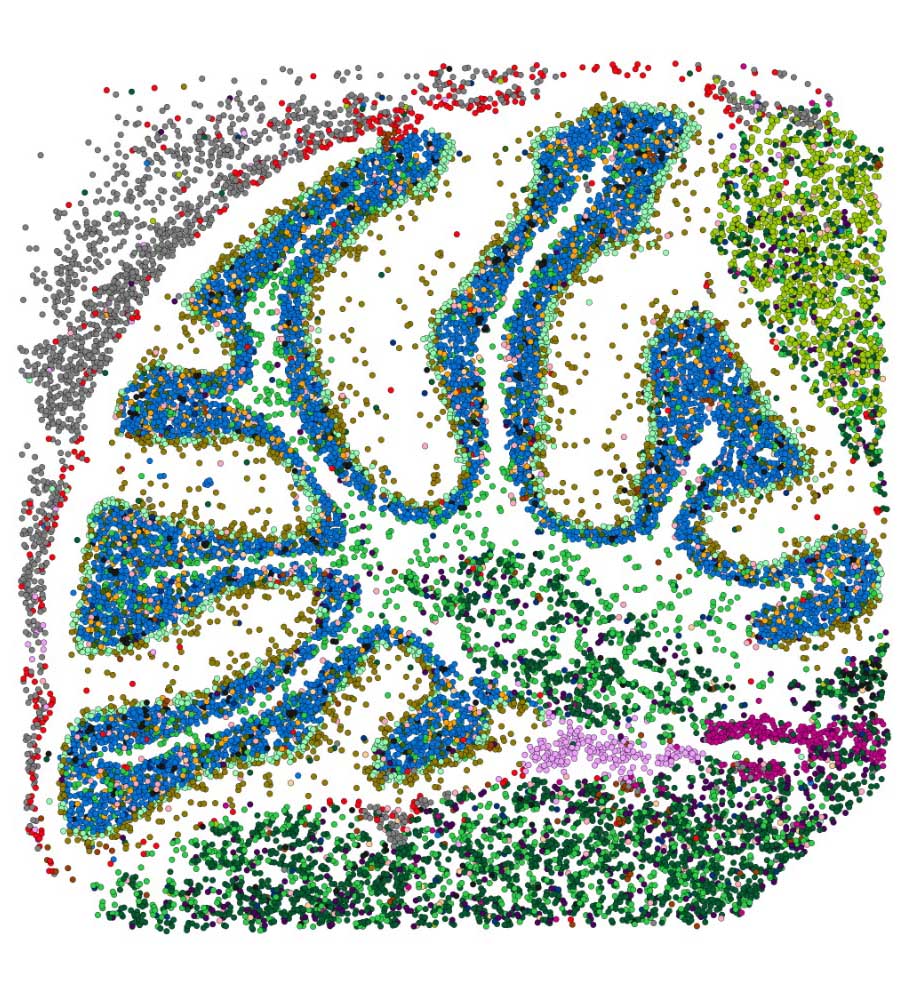

An essential aspect of data analysis is the assignment of taxonomic classifications. These can be done using machine learning techniques like RDP classifier, standard bioinformatics pipelines such as QIIME and Mothur, and reference databases like SILVA and Greengenes. Once we have a fairly comprehensive idea of the taxonomic and molecular composition of the samples being studied, we must visualize the data and statistically test any emerging patterns.

Microbiome differences are generally assessed through alpha and beta diversity metrics. Alpha diversity measures the diversity within a single sample and can be compared across different groups, such as comparing species diversity between diseased and control samples. Beta diversity, on the other hand, evaluates the diversity between samples, often using calculations that consider taxa abundance or phylogenetic relatedness. Several pipelines discussed above have provisions to calculate these metrics. Tools such as KEGG, GO, PICRUSt, or Tax4Fun are used to predict the metabolic functions of identified microbial genes, offering a glimpse into the functional capabilities of the microbiome. These tools allow coding gene identification, functional annotation, pathway enrichment analysis, clustering, scoring, and metabolic network derivation.

The complexity of microbiome data makes it difficult to apply conventional statistical methods and weed out confounding factors. Visualization techniques like principal coordinate analysis or component analysis are used to simplify the data and make it easy to visually spot patterns. Heat maps are also useful for comparison, and network analysis to illustrate interactions within the microbial community. Machine learning methods are becoming increasingly useful for comparing different samples and predicting outcomes in microbiome analysis.

Performing microbiome analysis is a multifaceted, complex process requiring different bioinformatic tools with keenly adjusted parameters. Using data from microbiome analysis effectively also requires organized metadata, standardized formats, specialized computing tools, and intuitive user interfaces. Strand is proficient at creating informatics solutions that can make this process seamless.

Strand partnered with a top 20 US pharmaceutical company to harmonize internal and external gene expression datasets from clinical collaborations, third-party sources and public repositories. Integrating internal data with external or publicly available datasets can enhance statistical power, enable comparative analysis, and drive discovery. However, differences in file formats, metadata, naming conventions, and platforms across disparate sources often make valid comparisons difficult, and hinders the extraction of meaningful clinical insights. Strand integrated the company’s internal data with external sources, organizing it into a hierarchical structure at Disease, Program, Study, Experiment, and Core Data levels, and creating a centralized repository of approximately 1,000 analysis-ready datasets. This repository features custom tagging for easy data retrieval, disease/tissue atlases, and visualizations for 170 datasets. The solution provided single-point access for all researchers and users, enabling streamlined data retrieval and ongoing data ingestion.

As the field evolves, our scientists at Strand are working to bring you cutting edge platforms that provide the precision and insights you need to advance your research.

Explore the rest of the blog series below: